I’ve been looking back lately, for professional reasons, at some of what was written about what we then called “ICTs for development” around the turn of the century.

Those were still early days for digital development, long before most of today’s applications were dreamt of, let alone deployed. But hopes were high. People talked about empowerment, about technology enabling individuals, communities and countries to overcome their inequalities, to “leapfrog” developmental disadvantage (there were some fancier phrases).

A time of optimism

It was a time of digital optimism, reflected in international initiatives like the UN ICT Task Force, the G8 DOT Force and the World Summit on the Information Society (WSIS). And it coincided with a world that was also relatively optimistic: one that was more relaxed after the end of the Cold War, that had seen authoritarian regimes, not least apartheid in South Africa, displaced in many countries.

New technology was seen as offering new hope for addressing what seemed to be intractable: the failures of generations of development interventions, the exclusion of minorities from democratic discourse, continued poverty, hunger and lack of opportunity.

Governments, development agencies and many civil society organisations were enthused by this potential transformation. While some did warn of downsides – the need for cybersecurity, datafication’s potential for surveillance – the overwhelming tone was one of optimism.

Why write about this now? For three reasons. Because of what we’ve learnt since then; because of what’s now being said about AI; and because the UN’s considering future digital governance in its planned Global Digital Compact (GDC).

What we have learnt

We’ve learnt, I hope, that optimism is not enough. People generally welcome the potential for digital technologies to transform the way things are done for the better, but there’s much greater recognition that they can also transform things for the worse.

Optimism remains a powerful ethos within the digital community. Businesses see opportunities for profit. Governments are keen to deploy technology, either to save money or to improve services.

But things have changed. We’re much more conscious now that things don’t always work out well.

Digitalisation has enabled many positive improvements in areas of development, but it’s not delivered greater equality and in some cases has been found to increase inequality.

Empowerment and surveillance have proved they can go hand in hand. Rights can be diminished as well as enhanced by digital technology. It’s questionable how far the “Arab Spring” was fuelled online, but it’s not sustained lasting political change. Populist soundbites have proved more influential online than nuanced political debate.

And the wider global context is now much less amenable to international agreement. It’s beset by military conflicts in several regions and a growing number of countries. The rules-based system that’s underpinned international relations for many years is increasingly in question. Underlying developmental problems haven’t gone away; they’ve been compounded by a growing understanding of the new existential threats associated with the climate.

Today’s is not an optimistic age, in short; it’s much more pessimistic.

A new inflection point

I’ve suggested elsewhere that we’re at a new inflection point in digital development, thanks to the rapid growth in artificial intelligence and other frontier technologies. There’s a risk here of repeating some of the heights of optimism that I’ve just described.

I listened recently to a speech by an international figure in development whose enthusiasm for AI seemed almost unbounded, and which was very reminiscent of the tone of discourse on ICT4D around the turn of the century.

‘Digital transformation’, he argued, could solve the developmental problems that previous generations of development activity (and indeed technology) had failed to solve. It would enable us, almost, to jettison the past and everything experience had taught us. A new world beckoned and we should embrace it rather than worrying what it might bring. Here at last, he seemed to say, was the ‘silver bullet’ that we’d hoped for all those years ago.

I’d not heard such a degree of optimism about technology from someone in the development sector for some time, though it echoed rhetoric emerging from some AI proponents. What worried me about it was how little attention it paid to the complexity of rapid technological change – and therefore, because technology does not evolve in isolation, of social, economic, political and cultural change.

Human and digital development

The goal of human development, in this context, is not maximising digital development but optimising it. To do that we need to consider and to understand potential consequences, not just assume or hope that they’ll be good. And we need to think through how they can be optimised, not just leave that to the whims of digital technologists and businesses.

There’s debate around this amongst leading figures in AI at present. Some, like Yann LeCun – pioneer of deep learning, Chief AI Scientist at Meta/Facebook – are immensely optimistic and disparage those who have concerns about its risks (see his self-declared ‘tirade against AI doomers’ at the World Economic Forum). Others, like his former colleague Geoffrey Hinton, are much more concerned about the unanticipated impacts of AI on our societies, stressing the need for scientists to take responsibility for what they develop and society to prioritise the public good.

A Global Compact

The United Nations is currently discussing what should be in a “Global Digital Compact” which will form part of the planned “Pact for the Future” that its Secretary-General hopes will be agreed at a Summit of the Future in September.

What’s called the “zero draft” for the GDC – the starting-point for its negotiation – was published in April. It’s an attempt to “outline shared principles for an open, free and secure future for all.”

It focuses on a number of themes that are essentially concerned with digital governance that’s suited to the future: closing digital divides and accelerating progress towards the Sustainable Development Goals; inclusion in the digital economy; ‘an inclusive, open, safe and secure digital space’; equitable data governance; and the governance of emerging technologies including AI.

In each of these it identifies opportunities and risks, proposes a number of commitments and suggests actions that should or could be taken now.

Many in the digital community see this as a proposal that is concerned primarily with the digital world. For other stakeholders in the UN system, though, it is about the contribution of digitalisation to broader global goals: one element towards the Pact for the Future, which is concerned with sustainable development, peace and security, international cooperation and “transforming global governance”. A zero draft of the Pact has also been published.

Space for both optimism and pessimism

There is space here for both optimism and pessimism, for addressing both opportunities and risks, for considering what has been achieved (for good or ill) by digitalisation since its early age of optimism, and what could be achieved (for good or ill) by the new waves of technology that are now imminent.

Since the century began, the tech we know has gone from being something whose potential we could only anticipate (and whose impacts were in fact highly uncertain) to something that’s pervasive (and whose impacts we understand much better).

AI and other frontier technologies are today at the anticipation stage that basic digital technologies were at back then, their impacts still highly uncertain.

We can apply experience from the last three decades to work out some of what we ought to do to optimise their impacts: to maximise the positives and minimise or mitigate the risks. There’s much more understanding now of the need to do this, and of the need for governance and regulation in pursuit of the public good (though not everyone agrees).

The international norms and frameworks that will be required to manage this don’t yet exist, though. Addressing impacts that reach beyond technology can’t be left to institutions that are primarily concerned with tech. All international institutions need to reflect the new and intensified pervasiveness of digital technology.

International relations today are not well-suited to achieving new agreements. Hence the importance – and the challenge – of the GDC.

Cyber-realism

In a blog post on this site in 2017, I wrote about the need to move on from cyber-optimism and cyber-pessimism to cyber-realism. I suggested this required four shifts in thinking:

- that we should stop thinking that digital technology was ‘good’ or ‘bad’ in itself but recognise that it creates opportunities and threats whose impact can be positive or negative (or both);

- that we should consider in advance how we want innovations to change our lives rather than letting technology take those decisions for us;

- that we should recognise we, in our communities and our societies, have the right to shape the Information Society, and that we need to exercise that right if we want it to respect human rights, protect the environment, enable sustainable development, promote diversity, limit capacity for conflict, and achieve greater equality;

- that we should consider today’s and tomorrow’s technological developments from the perspective of the future: ‘that we should analyse the future rather than gaze at it in wonder or in fear.’

Polarised debate between digital optimists and digital pessimists, digital champions and digital detractors, digital insiders and the wider world community, is proving unproductive. Digitalisation is happening at pace, and existing institutional frameworks are struggling to keep up with it.

We need, I’d argue, much more open discourse about the relationship between human and digital development, discourse that’s more concerned with optimising human outcomes than with maximising digital opportunities.

That discourse needs to be inclusive. Not confined to digital insiders but involving all communities of expertise and practice. In development, environment, human rights, conflict management, health, education and every other area of human development.

And it requires institutional innovation. Fresh thinking’s needed – from diplomats, digital insiders and those in all those areas of human development – about the frameworks that are best suited to the common good. Optimists and pessimists should both agree with that.

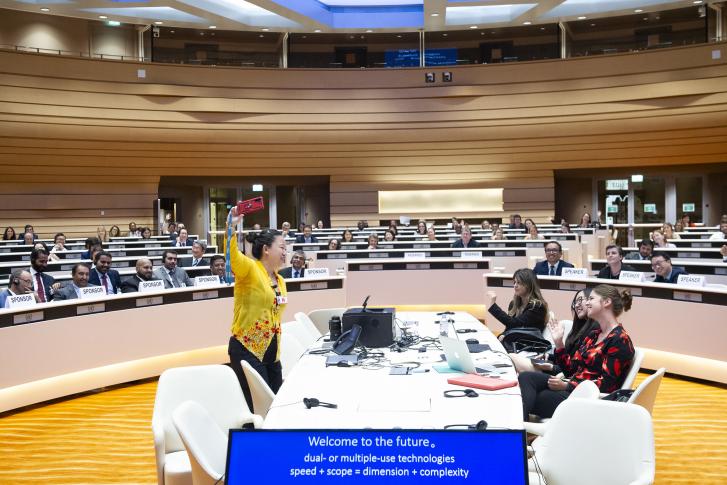

Image: UNIDIR 2019 Innovations Dialogue, Digital Technologies and International Security by UN Geneva (CC BY-NC-ND 2.0)